Our next guest post is by Vinayak Naik, Associate Professor and Founding Coordinator of the MTech in CSE with specialization in Mobile Computing at IIIT-Delhi, New Delhi, India. He writes about his group’s research on using smartphone sensors to measure metro traffic in New Delhi.

In recent years, mobile sensing has been applied to a myriad of interesting problems that rely on collecting real-time data from various sources. Crowdsourcing, or participatory sensing, is used extensively to collect data for solving day-to-day problems. One such problem of growing importance is analytics for smart cities. Wikipedia defines a smart city as an urban development vision to integrate multiple information and communication technology (ICT) solutions in a secure fashion to manage a city’s assets, and notable research is being conducted in this direction. One of the most important of these assets is transportation. With growing populations and shrinking land space, it is important to find solutions that reduce pollution caused by vehicles and ease commuting between any two locations within the city.

Today, cities in developed countries, such as New York and London, log large volumes of data every day from sensors installed on highways and at metro stations. This data is typically analyzed to understand how roads and the metro are used across the city and to support better, more uniform city planning in the long term. However, a common challenge in developing countries is the paucity of such detailed data, owing to the lack of sensors in the infrastructure. In the absence of these statistics, the most credible solution is to collect data via participatory sensing, using the low-cost sensors that come packaged with modern smartphones, such as the accelerometer, gyroscope, magnetometer, GPS, and WiFi. These sensors can collect data on behalf of users, and once analyzed, this data can be leveraged in the same way as data from infrastructure-based sensors.

In the short term, this data can be used to inform commuters about the expected rush at stations. This is important because, in some extreme cases, the wait time at a station can exceed the travel time itself. Elderly people, people with disabilities, and children could then choose to avoid traveling when stations are crowded. Figure 1(a) and Figure 1(b) show two snapshots of the platform at Rajiv Chowk metro station in Delhi, illustrating how much the rush can vary in a real-life scenario. In the long run, information from our solution could also be integrated with route-planning tools (e.g., Google Maps, see Figure 1(c)) to give an estimated waiting time at stations. This would help those who want to minimize or avoid waiting at stations.

About a decade ago, even cities in developed countries had little infrastructure for collecting sensor data. The author was the lead integrator of the Personal Environmental Impact Report (PEIR) project in 2008. The objective of the project was to estimate emissions and exposure to pollution for the city of Los Angeles, USA [1]. PEIR used data from smartphones to address this lack of data. Today, the same approach applies in developing countries, where smartphones are more widespread than infrastructure-based sensors. At IIIT-Delhi, master’s student Megha Vij, co-advised by Prof. Viswanath Gunturi and the author, worked in her thesis [2] on building a model that predicts metro station activity in New Delhi, India, using accelerometer logs collected from a smartphone app.

Our approach is to detect a commuter’s entry into a metro station and then measure the time the commuter spends until boarding the train. Similarly, we measure the time spent from disembarking the train until exiting the metro station. These two durations indicate the rush at metro stations: the greater the rush, the more time spent. We leverage geo-fencing APIs on smartphones to detect entry into and exit from metro stations. These APIs use GPS efficiently to detect whether the user has crossed a boundary, in our case a perimeter around the metro station.

To detect boarding and disembarking, we use the smartphone’s accelerometer to determine whether the commuter is in a train. Unlike geo-fencing, this is an unsolved problem. We treat it as a two-class classification problem, where the goal is to detect whether a person is traveling in a train or is at the metro station. The “in-metro-station” activity is a collection of many micro-activities, including walking, climbing stairs, and queuing, which must be combined into a single category for classification. We explore an array of discriminative classification algorithms, both probabilistic and non-probabilistic, to separate these patterns into “in-train” and “in-metro-station” classes.
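The timing step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the thesis implementation: it assumes the geo-fence entry and exit timestamps are already known, and that the trip’s accelerometer stream has been cut into fixed-length windows, each labeled by some classifier. The `Window` type and `station_durations` function are hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

IN_TRAIN = "in-train"
IN_STATION = "in-metro-station"


@dataclass
class Window:
    start: float  # window start, seconds (hypothetical time base)
    end: float    # window end, seconds
    label: str    # classifier output for this accelerometer window


def station_durations(entry_time: float, exit_time: float,
                      windows: List[Window]) -> Optional[Tuple[float, float]]:
    """Return (seconds from entry to boarding, seconds from disembarking to exit).

    entry_time: geo-fence entry into the origin station.
    exit_time: geo-fence exit from the destination station.
    windows: time-ordered, classified accelerometer windows for the trip.
    Returns None if no window was classified as in-train.
    """
    train = [w for w in windows if w.label == IN_TRAIN]
    if not train:
        return None
    boarded_at = train[0].start     # start of the first in-train window
    disembarked_at = train[-1].end  # end of the last in-train window
    return boarded_at - entry_time, exit_time - disembarked_at


# Usage with made-up one-minute windows: two minutes at the origin
# station, two minutes in the train, one minute at the destination.
trip = [
    Window(0, 60, IN_STATION), Window(60, 120, IN_STATION),
    Window(120, 180, IN_TRAIN), Window(180, 240, IN_TRAIN),
    Window(240, 300, IN_STATION),
]
print(station_durations(0.0, 300.0, trip))  # (120.0, 60.0)
```

Taking the first and last in-train windows is the simplest possible rule. A single misclassified window inside a station would distort both durations, so a real pipeline would likely smooth the label sequence before applying it.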

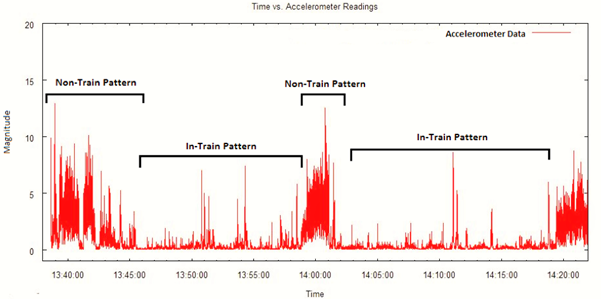

Figure 2 illustrates the “in-train” and “in-metro-station” classes on sample accelerometer data that we collected. There is a stark difference in accelerometer values between the two classes. The values for the “in-train” class have low variance, whereas the “in-metro-station” class shows heavy fluctuations (and thus much greater variance). This is expected, since a train typically moves at a uniform speed, whereas a commuter at a station performs several small activities such as walking, waiting, climbing, and sprinting.
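The variance difference in Figure 2 suggests a very simple baseline. The Python sketch below computes the variance of the acceleration magnitude over fixed windows and fits a single variance threshold (a decision stump) on labeled windows. It is a hypothetical stand-in for illustration only, not one of the classifiers evaluated in the thesis [2]. The window size, the use of the orientation-independent magnitude, the function names, and the synthetic data are all assumptions.

```python
import math
from statistics import pvariance
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) accelerometer reading, m/s^2


def magnitude(s: Sample) -> float:
    # Orientation-independent, since the phone may be in a pocket or a hand.
    return math.sqrt(s[0] ** 2 + s[1] ** 2 + s[2] ** 2)


def window_variances(samples: Sequence[Sample], size: int) -> List[float]:
    """Variance of the magnitude over non-overlapping windows of `size` samples."""
    mags = [magnitude(s) for s in samples]
    return [pvariance(mags[i:i + size])
            for i in range(0, len(mags) - size + 1, size)]


def fit_threshold(variances: List[float], labels: List[str]) -> float:
    """Pick the variance threshold that misclassifies the fewest training windows.

    Windows with variance <= threshold are predicted "in-train".
    """
    values = sorted(set(variances))
    # Candidate thresholds: midpoints between consecutive distinct variances.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])] or values
    best_t, best_err = candidates[0], len(variances) + 1
    for t in candidates:
        err = sum((v <= t) != (lab == "in-train")
                  for v, lab in zip(variances, labels))
        if err < best_err:
            best_t, best_err = t, err
    return best_t


def classify(variance: float, threshold: float) -> str:
    return "in-train" if variance <= threshold else "in-metro-station"


# Synthetic data: small fluctuations around gravity in the train,
# large fluctuations at the station.
train_samples = [(0.0, 0.0, 9.8 + 0.05 * (-1) ** i) for i in range(100)]
station_samples = [(0.0, 0.0, 9.8 + 2.0 * (-1) ** i) for i in range(100)]
var_train = window_variances(train_samples, 50)      # two windows
var_station = window_variances(station_samples, 50)  # two windows
labels = ["in-train"] * len(var_train) + ["in-metro-station"] * len(var_station)
t = fit_threshold(var_train + var_station, labels)
print(classify(var_train[0], t))  # in-train
```

A single threshold on one feature is the crudest discriminative model; it mainly shows that variance alone already separates clean examples like these. Real logs mix in braking, acceleration, and crowded carriages, which is why richer feature sets and classifiers are worth exploring.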

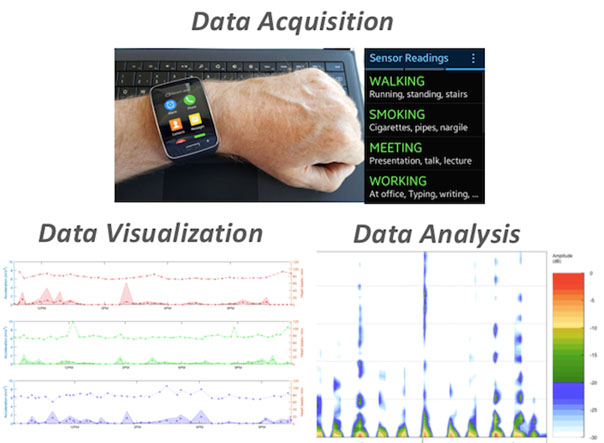

This problem is not limited to analytics for smart cities; our work also applies to personal health. Smart bands and watches are used these days to detect users’ activities, such as sitting, walking, and running. At IIIT-Delhi, we are working on these problems. A standardized data collection and sharing app for smartphones would greatly accelerate our research. CrowdSignals.io fills this need aptly.

[1] PEIR (Personal Environmental Impact Report)

http://www.cens.ucla.edu/events/2008/07/18/cens_presentation_peir.pdf

[2] Using smartphone-based accelerometer to detect travel by metro train

https://repository.iiitd.edu.in/jspui/handle/123456789/396

We live in exciting times. In the cognitive sciences, the big news for the last twenty or thirty years has been the ability to look inside the functioning brain in real time. A lot has been learned but, as always, science is hard and progress occurs in fits and starts. A critical piece that has been missing is the ability to characterize the environment in which people operate. In the early 1990s, John Anderson introduced rational analysis, which uses statistical analyses of environmental contingencies in order to understand the structure of cognition. Despite showing early promise, the method was stymied by a lack of technologies to collect the environmental data. Now the situation has changed. Smartphones, watches and other wearables are starting to provide us with access to environmental data at scale. For the first time, we can look at cognition from the inside and outside at the same time. Efforts such as